So last week I attended i-Know 2014 in Graz (Austria) for the first time ever. It featured a workshop on "Information Ergonomics", whose description suggested it would be

- very interesting

- fairly novel

- an adequate forum to present my forthcoming PhD dissertation on knowledge work productivity (a topic that still appears to be fairly rare in current research)

Unfortunately, because my contribution to the conference was "workshop only", my final paper didn't make it into the organisers' central repository ahead of the event. That is why the workshop web page says less about my paper than about the others.

To remedy this unfortunate situation, here is my full paper:

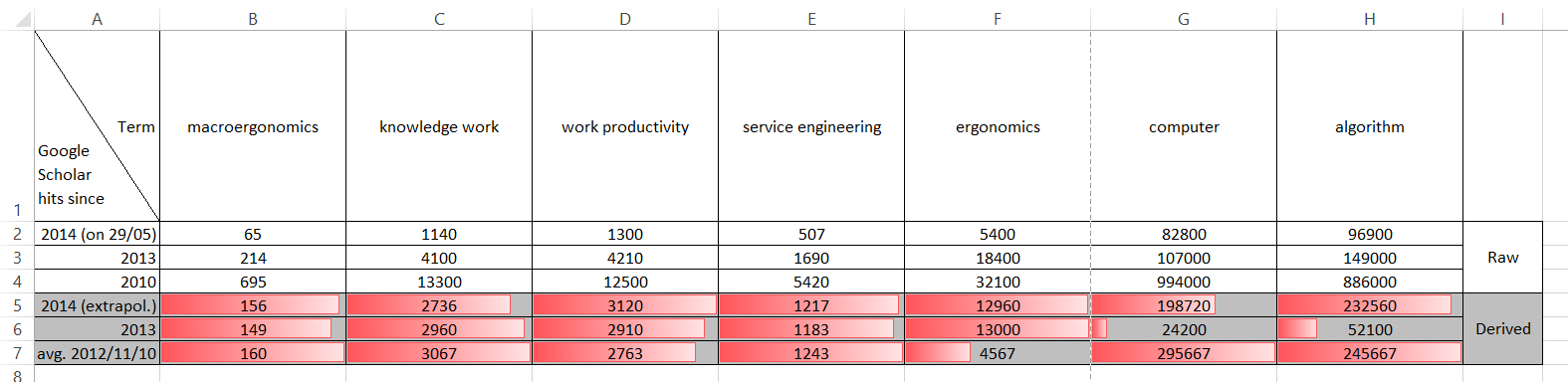

A predictive analytics approach to derive standard times for productivity management of case-based knowledge work: a proof-of-concept study in claims examination of intellectual property rights (IPRs)

There'll be more about it in my dissertation before the end of the year. For now, enjoy what you've got and stay tuned. There should also be a joint "Information Ergonomics" paper before the next i-Know. Interesting times...

P.S.: Just for the record: as the "Design Thinking" keynote at i-Know suggested (and Wikipedia confirms), the "collaborative Advanced Design Project (cADP)" we created in 2005 (documented in our 2007 ICED paper) was a very early adoption of the 2004 "d.school" approach to teaching, and it's still going strong! Kudos to my co-authors!